Abstract

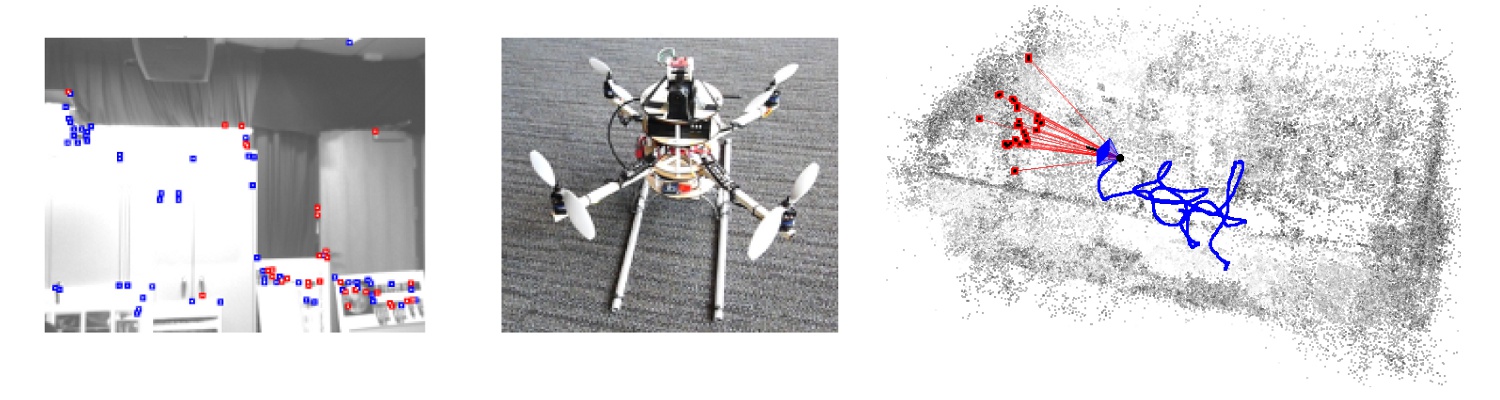

We present a real-time approach for image-based localization within large scenes that have been reconstructed offline using structure from motion (SfM). From monocular video, our method continuously computes a precise 6-DOF camera pose by efficiently tracking natural features and matching them to 3D points in the SfM point cloud. Our main contribution lies in efficiently interleaving a fast keypoint tracker that uses inexpensive binary feature descriptors with a new approach for direct 2D-to-3D matching. The 2D-to-3D matching avoids the need for online extraction of scale-invariant features. Instead, we construct an indexed database offline that contains multiple DAISY descriptors per 3D point, extracted at multiple scales. The key to the efficiency of our method lies in invoking DAISY descriptor extraction and matching sparingly during localization, and in distributing this computation over a window of successive frames. This enables the algorithm to run in real time, without fluctuations in latency over long durations. We evaluate the method in large indoor and outdoor scenes. Our algorithm runs at over 30 Hz on a laptop and at 12 Hz on a low-power mobile computer suitable for onboard computation on a quadrotor micro aerial vehicle.
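
The idea of distributing expensive descriptor extraction and matching over a window of successive frames can be illustrated with a small scheduling sketch. This is not the authors' implementation: the function name `schedule_matching`, the per-frame `budget_per_frame` parameter, and the notion of a "job" (one keypoint awaiting descriptor extraction and 2D-to-3D matching) are assumptions made purely for illustration.

```python
from collections import deque


def schedule_matching(new_jobs_per_frame, budget_per_frame):
    """Spread expensive matching jobs over successive frames.

    new_jobs_per_frame: list of ints; entry i is the number of
        hypothetical jobs (keypoints needing descriptor extraction
        and matching) that arrive at frame i.
    budget_per_frame: maximum number of jobs processed in one frame.

    Returns (processed, leftover): the number of jobs handled at each
    frame, and the number still pending after the last frame.
    """
    pending = deque()
    processed = []
    for frame_idx, n_new in enumerate(new_jobs_per_frame):
        # Queue the jobs that became available at this frame.
        pending.extend((frame_idx, k) for k in range(n_new))
        # Process at most `budget_per_frame` jobs, oldest first, so the
        # per-frame cost stays bounded regardless of the burst size.
        done = 0
        while pending and done < budget_per_frame:
            pending.popleft()
            done += 1
        processed.append(done)
    return processed, len(pending)


if __name__ == "__main__":
    # A burst of 10 jobs at frame 0 is absorbed over four frames.
    print(schedule_matching([10, 0, 0, 0], budget_per_frame=3))
```

With a fixed per-frame budget, a burst of work at one frame is drained gradually instead of causing a latency spike, which is the property the abstract attributes to windowed computation.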

@inproceedings{LIM-CVPR12,
  author = "Hyon Lim and Sudipta N. Sinha and Michael F. Cohen and Matthew Uyttendaele",
  title = "Real-time Image-based 6-DOF Localization in Large-Scale Environments",
  booktitle = "IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2012)",
  location = "Providence, RI",
  month = "June",
  year = "2012",
}
