|

Abstract

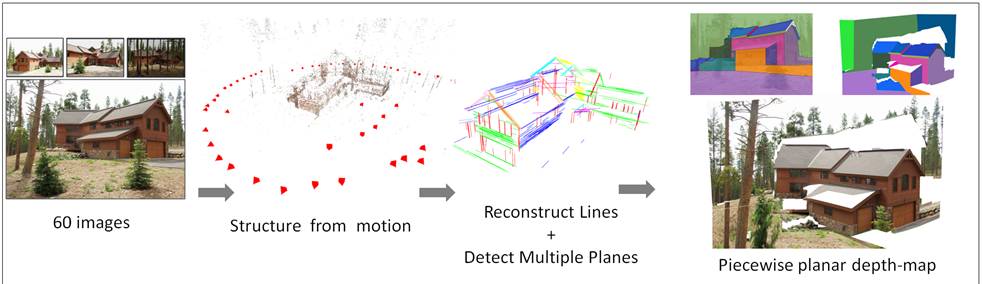

We present a novel multi-view stereo method designed for image-based rendering that generates piecewise planar depth maps from an unordered collection of photographs. First, a discrete set of 3D plane candidates is computed from a sparse point cloud of the scene (recovered by structure from motion) and sparse 3D line segments reconstructed from multiple views. Next, evidence is accumulated for each plane using 3D point and line incidence and photo-consistency cues. Finally, a piecewise planar depth map is recovered for each image by solving a multi-label Markov Random Field (MRF) optimization problem using graph cuts. Our novel energy minimization formulation exploits high-level scene information: it incorporates geometric constraints derived from vanishing directions, enforces free-space violation constraints based on ray visibility of 3D points and 3D lines, and imposes smoothness priors specific to planes that intersect.
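
As a rough illustration of the point-incidence cue mentioned above, the sketch below counts how many sparse 3D points lie close to each candidate plane. It is not the paper's formulation: the function name `plane_point_support`, the plane parameterization `(a, b, c, d)`, and the distance tolerance `tol` are all assumptions made for this example, and line incidence and photo-consistency are left out.

```python
import math

def plane_point_support(planes, points, tol=0.05):
    """Count, per candidate plane, the 3D points within `tol` of the plane.

    planes: iterable of (a, b, c, d) for the plane a*x + b*y + c*z + d = 0
            (hypothetical parameterization chosen for this sketch).
    points: iterable of (x, y, z) coordinates from the sparse point cloud.
    tol:    assumed distance threshold, in scene units.
    """
    support = []
    for a, b, c, d in planes:
        # Length of the normal, so the residual becomes a Euclidean distance.
        norm = math.sqrt(a * a + b * b + c * c)
        count = 0
        for x, y, z in points:
            if abs(a * x + b * y + c * z + d) / norm <= tol:
                count += 1
        support.append(count)
    return support

# Two candidate planes: z = 0 and x = 1 (the latter with an unnormalized normal).
candidates = [(0.0, 0.0, 1.0, 0.0), (2.0, 0.0, 0.0, -2.0)]
cloud = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.01), (0.5, 0.0, 0.02),
         (1.0, 5.0, 3.0), (4.0, 4.0, 4.0)]
print(plane_point_support(candidates, cloud))
```

In a full pipeline, a count like this would be only one of several cues feeding the per-plane evidence that the MRF labeling then uses.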

We demonstrate the effectiveness of our approach on a wide variety of outdoor and indoor datasets. The view interpolation results are perceptually pleasing, as straight lines are preserved and holes are minimized even for challenging scenes with non-Lambertian and textureless surfaces.

|

|

Paper

Video (Divx)

More Results

|